moabb.datasets.base.BaseDataset#

- class moabb.datasets.base.BaseDataset(subjects, sessions_per_subject, events, code, interval, paradigm, doi=None, unit_factor=1000000.0)[source]#

Abstract Moabb BaseDataset.

Parameters required for all datasets

- Parameters

subjects (List of int) – List of subject number (or tuple or numpy array)

sessions_per_subject (int) – Number of sessions per subject (if varying, take minimum)

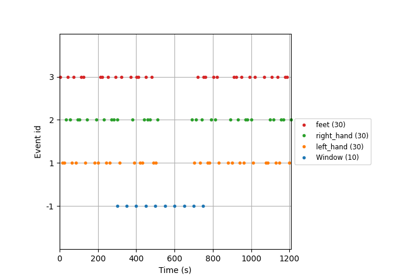

events (dict of strings) – String codes for events matched with labels in the stim channel. Currently imagery codes codes can include: - left_hand - right_hand - hands - feet - rest - left_hand_right_foot - right_hand_left_foot - tongue - navigation - subtraction - word_ass (for word association)

code (string) – Unique identifier for dataset, used in all plots. The code should be in CamelCase.

interval (list with 2 entries) – Imagery interval as defined in the dataset description

paradigm (['p300','imagery', 'ssvep']) – Defines what sort of dataset this is

doi (DOI for dataset, optional (for now)) –

- abstract data_path(subject, path=None, force_update=False, update_path=None, verbose=None)[source]#

Get path to local copy of a subject data.

- Parameters

subject (int) – Number of subject to use

path (None | str) – Location of where to look for the data storing location. If None, the environment variable or config parameter

MNE_DATASETS_(dataset)_PATHis used. If it doesn’t exist, the “~/mne_data” directory is used. If the dataset is not found under the given path, the data will be automatically downloaded to the specified folder.force_update (bool) – Force update of the dataset even if a local copy exists.

update_path (bool | None Deprecated) – If True, set the MNE_DATASETS_(dataset)_PATH in mne-python config to the given path. If None, the user is prompted.

verbose (bool, str, int, or None) – If not None, override default verbose level (see

mne.verbose()).

- Returns

path – Local path to the given data file. This path is contained inside a list of length one, for compatibility.

- Return type

- download(subject_list=None, path=None, force_update=False, update_path=None, accept=False, verbose=None)[source]#

Download all data from the dataset.

This function is only useful to download all the dataset at once.

- Parameters

subject_list (list of int | None) – List of subjects id to download, if None all subjects are downloaded.

path (None | str) – Location of where to look for the data storing location. If None, the environment variable or config parameter

MNE_DATASETS_(dataset)_PATHis used. If it doesn’t exist, the “~/mne_data” directory is used. If the dataset is not found under the given path, the data will be automatically downloaded to the specified folder.force_update (bool) – Force update of the dataset even if a local copy exists.

update_path (bool | None) – If True, set the MNE_DATASETS_(dataset)_PATH in mne-python config to the given path. If None, the user is prompted.

accept (bool) – Accept licence term to download the data, if any. Default: False

verbose (bool, str, int, or None) – If not None, override default verbose level (see

mne.verbose()).

- get_data(subjects=None, cache_config=None, process_pipeline=None)[source]#

Return the data corresponding to a list of subjects.

The returned data is a dictionary with the following structure:

data = {'subject_id' : {'session_id': {'run_id': run} } }

subjects are on top, then we have sessions, then runs. A sessions is a recording done in a single day, without removing the EEG cap. A session is constitued of at least one run. A run is a single contiguous recording. Some dataset break session in multiple runs.

Processing steps can optionally be applied to the data using the

*_pipelinearguments. These pipelines are applied in the following order:raw_pipeline->epochs_pipeline->array_pipeline. If a*_pipelineargument isNone, the step will be skipped. Therefore, thearray_pipelinemay either receive amne.io.Rawor amne.Epochsobject as input depending on whetherepochs_pipelineisNoneor not.- Parameters

subjects (List of int) – List of subject number

cache_config (dict | CacheConfig) – Configuration for caching of datasets. See

CacheConfigfor details.process_pipeline (Pipeline | None) – Optional processing pipeline to apply to the data. To generate an adequate pipeline, we recommend using

moabb.utils.make_process_pipelines(). This pipeline will receivemne.io.BaseRawobjects. The steps names of this pipeline should be elements ofStepType. According to their name, the steps should either return amne.io.BaseRaw, amne.Epochs, or anumpy.ndarray(). This pipeline must be “fixed” because it will not be trained, i.e. no call tofitwill be made.

- Returns

data – dict containing the raw data

- Return type

Dict

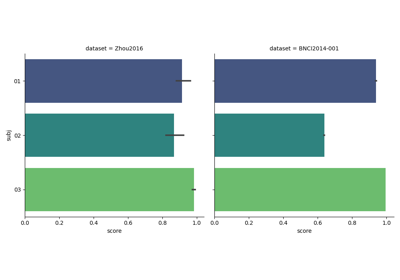

Examples using moabb.datasets.base.BaseDataset#

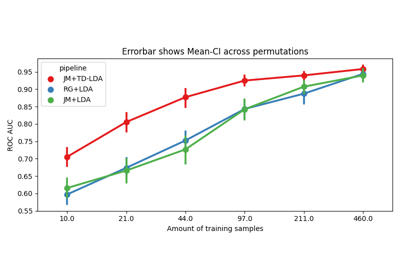

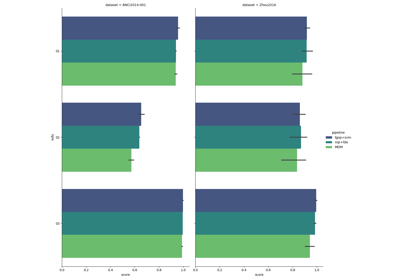

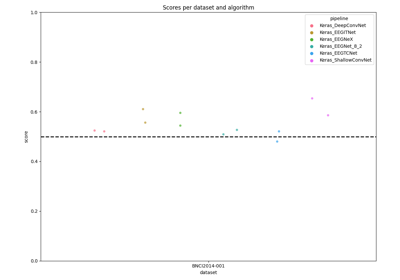

Benchmarking on MOABB with Tensorflow deep net architectures