moabb.evaluations.CrossSessionEvaluation

- class moabb.evaluations.CrossSessionEvaluation(paradigm, datasets=None, random_state=None, n_jobs=1, overwrite=False, error_score='raise', suffix='', hdf5_path=None, additional_columns=None, return_epochs=False, return_raws=False, mne_labels=False, n_splits=None, save_model=False, cache_config=None, optuna=False, time_out=900)

Cross-session performance evaluation.

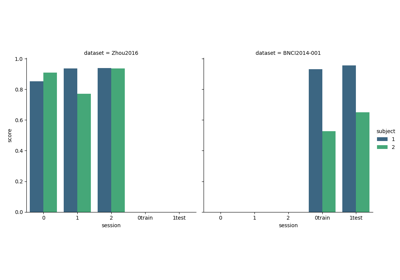

Evaluate the performance of the pipeline across sessions, within a single subject. Verifies that there are at least two sessions before starting the evaluation.

- Parameters

paradigm (Paradigm instance) – The paradigm to use.

datasets (list of Dataset instances) – The list of datasets on which to run the evaluation. If None, the list of compatible datasets is retrieved from the paradigm instance.

random_state (int, RandomState instance, default=None) – If not None, fixes the seed used for shuffling examples so that runs are reproducible.

n_jobs (int, default=1) – Number of jobs for fitting of pipeline.

overwrite (bool, default=False) – If True, overwrite the results.

error_score ("raise" or numeric, default="raise") – Value to assign to the score if an error occurs in estimator fitting. If set to "raise", the error is raised.

suffix (str) – Suffix for the results file.

hdf5_path (str) – Specific path for storing the results and models.

additional_columns (None) – Additional columns of information to add to the results.

return_epochs (bool, default=False) – Use MNE epochs to train pipelines.

return_raws (bool, default=False) – Use MNE raw data to train pipelines.

mne_labels (bool, default=False) – If returning MNE epochs, use the original dataset labels if True.

save_model (bool, default=False) – Save the model after training, for each fold of cross-validation if needed.

cache_config (bool, default=None) – Configuration for caching of datasets. See moabb.datasets.base.CacheConfig for details.

Notes

New in version 1.1.0: Add save_model and cache_config parameters.
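The cross-session scheme described above can be illustrated without the library. The sketch below assumes a leave-one-session-out split, which is one common way to evaluate across sessions; the text above does not state which splitter the class uses, so treat this as an illustration of the idea rather than the library's implementation. The function name `leave_one_session_out` and the session ids are hypothetical.

```python
from collections import defaultdict


def leave_one_session_out(session_labels):
    """Yield (held_out_session, train_idx, test_idx) for each session.

    `session_labels` holds one session id per trial of a single subject.
    Assumption: each session is held out once while the others are used
    for training (a leave-one-session-out scheme).
    """
    by_session = defaultdict(list)
    for idx, session in enumerate(session_labels):
        by_session[session].append(idx)

    # Mirrors the documented precondition: at least two sessions are needed.
    if len(by_session) < 2:
        raise ValueError("cross-session evaluation needs at least two sessions")

    ordered = sorted(by_session)
    for held_out in ordered:
        test_idx = by_session[held_out]
        train_idx = [i for s in ordered if s != held_out for i in by_session[s]]
        yield held_out, train_idx, test_idx


# Hypothetical session ids for six trials of one subject.
sessions = ["0train", "0train", "0train", "1test", "1test", "1test"]
splits = list(leave_one_session_out(sessions))
for held_out, train_idx, test_idx in splits:
    print(held_out, train_idx, test_idx)
```

With two sessions this produces two folds, each training on one session and testing on the other, so no trial from the held-out session leaks into training.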

- evaluate(dataset, pipelines, param_grid, process_pipeline, postprocess_pipeline=None)

Evaluate results on a single dataset.

This method returns a generator. Each result item is a dict with the following convention:

res = {'time': duration of the training, 'dataset': dataset id, 'subject': subject id, 'session': session id, 'score': score, 'n_samples': number of training examples, 'n_channels': number of channels, 'pipeline': pipeline name}
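Because the method yields one dict per result, the output can be consumed lazily and aggregated as it arrives. The sketch below is a minimal illustration using a hypothetical stand-in generator, `fake_evaluate`, that yields dicts with the keys listed above; the values are made up for the example and are not results from any dataset.

```python
from collections import defaultdict
from statistics import mean


def fake_evaluate():
    """Hypothetical stand-in for the generator: yields one dict per result."""
    rows = [
        ("pipeA", "0", 0.80),
        ("pipeA", "1", 0.70),
        ("pipeB", "0", 0.60),
        ("pipeB", "1", 0.65),
    ]
    for pipeline, session, score in rows:
        yield {
            "time": 0.5,          # made-up training duration
            "dataset": "demo",    # made-up dataset id
            "subject": 1,
            "session": session,
            "score": score,
            "n_samples": 100,
            "n_channels": 22,
            "pipeline": pipeline,
        }


def mean_score_per_pipeline(results):
    """Average the 'score' field over all result dicts of each pipeline."""
    scores = defaultdict(list)
    for res in results:
        scores[res["pipeline"]].append(res["score"])
    return {name: mean(vals) for name, vals in scores.items()}


summary = mean_score_per_pipeline(fake_evaluate())
print(summary)
```

Grouping on `'pipeline'` is only one choice; the same loop could key on `'session'` or `'subject'` to compare held-out sessions or subjects instead.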

- is_valid(dataset)

Verify the dataset is compatible with evaluation.

This method is called to verify that the datasets given in the constructor are compatible with the evaluation context.

This method should return False if the dataset does not match the evaluation. This is the case, for example, if the dataset does not contain enough sessions for a cross-session evaluation.

- Parameters

dataset (dataset instance) – The dataset to verify.
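The validity rule for a cross-session evaluation (at least two sessions) can be sketched as a simple predicate. The example below uses a hypothetical `DemoDataset` stand-in with an `n_sessions` field; that attribute name is an assumption made for illustration, and the real check in the library may inspect the dataset differently.

```python
from dataclasses import dataclass


@dataclass
class DemoDataset:
    """Hypothetical stand-in; not a moabb dataset class."""
    code: str
    n_sessions: int  # assumed attribute holding the number of sessions


def is_valid_for_cross_session(dataset):
    """Return True only if the dataset has at least two sessions."""
    return dataset.n_sessions >= 2


candidates = [DemoDataset("single", 1), DemoDataset("double", 2)]
usable = [d.code for d in candidates if is_valid_for_cross_session(d)]
print(usable)
```

Filtering datasets up front like this avoids starting an evaluation that cannot hold out a session.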